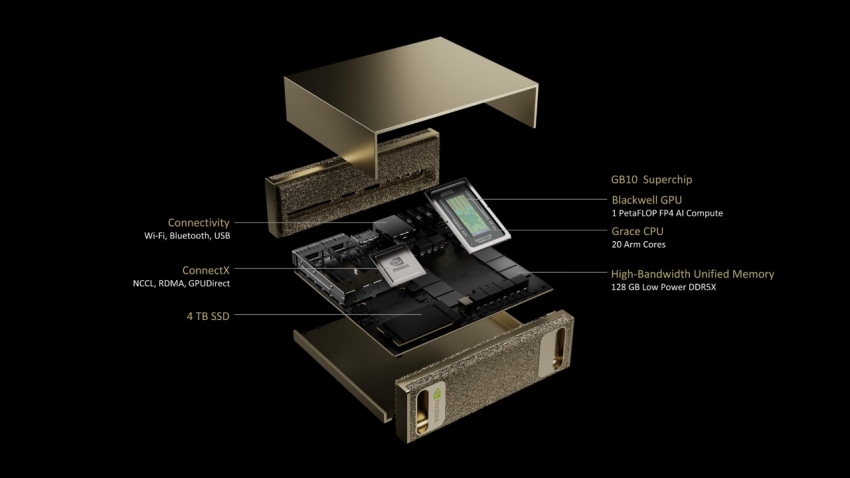

At CES, Nvidia just announced at Project Digits which is branded as your “personal AI Super Computer.” What makes this interesting:

- 128GB of memory is enough to run 70B models. That can open up some new experimentation options.

- You can link a couple of these together to run even larger models. This is the technique used for data center shizzle, so bringing this to the desktop is cool.

- The Nvidia stack. Having access to the Blackwell architecture is sweet, but the secret sauce is the software stack, specifically CUDA. This is really Nvidia’s moat that gives them the competitive advantage. Build against this, and you can run on any of Nvidia’s stuff from the edge on up to hyperscale data centers.

If you are a software engineer, IMO it’s worth investing some $ in this type of hardware vs. spinning up cloud instances to learn. Why? There are things you can do locally on your own network that allow you to experiment/learn faster than in the cloud. For instance, video feeds are very high bandwidth that are easier to experiment with locally than pushing that feed to the cloud (and all the security that goes with exposing outside your firewall.)

Some related posts….

https://www.seanfoley.blog/visual-programming-with-tokens/

https://www.seanfoley.blog/musings-on-all-the-ai-buzz/